The CoreSAEP project (Computational Reasoning for Socially Adaptive Electronic Partners, 2014-2022) is about software that understands norms and values of people.

At least, this was the layperson’s summary I came up with at the start of the project. While it made for a nice tagline, I later started using the somewhat humbler phrasing of software that takes into account norms and values of people. Whether digital technology can ever be considered to truly understand people is, I believe, an open question that we are nowhere near answering. A further refinement I came to see as necessary is that our focus is on individuals and their behavior, leading to the following phrasing of what we study in the project: software that takes into account personal norms and values.

NWO Vidi Personal Grant

The CoreSAEP project is a Vidi personal grant (800K), funded by the Dutch funding agency NWO. It was awarded to me in 2014; however, due to illness I wasn’t able to start until 2017. It was a 5-year project that concluded in 2022. The project funded one PhD candidate (Dr. Ilir Kola) and two postdocs (Dr. Malte Kliess and Dr. Myrthe Tielman), who were co-supervised with Prof. Dr. Catholijn Jonker.

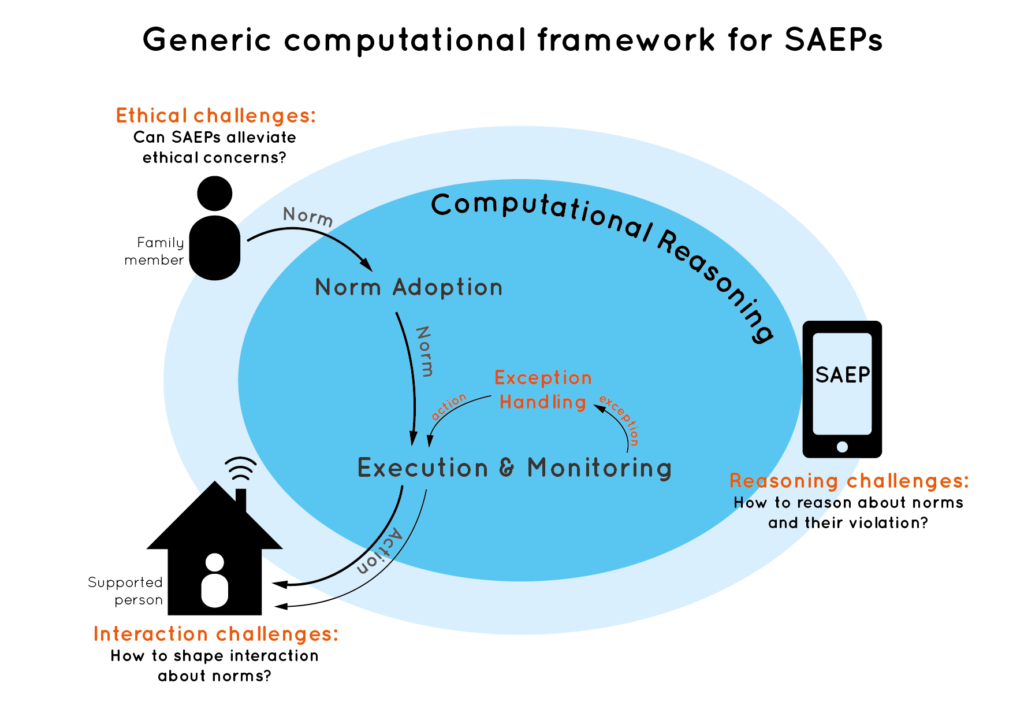

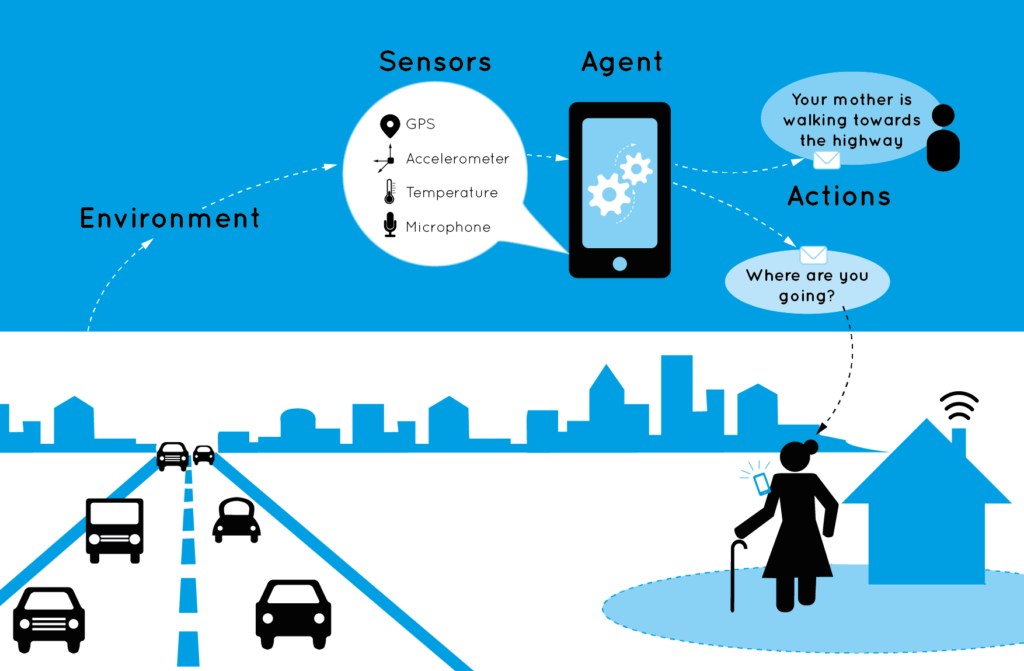

The aim of this project was to develop a new computational reasoning framework for Socially Adaptive Electronic Partners (SAEPs) that support people in their daily lives without people having to adapt their way of living to the software. We argued that this will require software that is flexible so that it can adapt to diverse and evolving rules of behavior (norms) of people in unforeseen circumstances.

We identified three core challenges in realising SAEPs as depicted in the graphic below and further described in our AAMAS vision paper Creating Socially Adaptive Electronic Partners: Interaction, Reasoning and Ethical Challenges (2015):

- Interaction challenges: How to shape interaction about norms?

- Reasoning challenges: How to reason about norms and their violation?

- Ethical challenges: Can SAEPs alleviate ethical concerns?

We used the term Socially Adaptive Computing to refer to software which not only takes into account human norms and values, but is also able to fine-tune its actions based on these concepts. Furthermore, since these electronic partners will be directly interacting with people, we developed formal models and reasoning techniques to ensure reliability (guarantees on the behavior of the software) and resilience (flexibility in unforeseen circumstances).

Project results

An overview of the insights we have obtained throughout the project can be found in the short video I made for the concluding symposium of the project: ‘Being human in the digital society: on technology, norms and us’ (slides). I also discuss some of these questions in a piece (in Dutch) for the platform Beste-ID.nl: In gesprek met machines / in conversation with machines. Furthermore, we have made an ‘infographic’ that presents the project’s results in an accessible way (CoreSAEP Infographic English / CoreSAEP Infographic Dutch, illustrations and design by Just Julie).

The PhD candidate of the CoreSAEP project, Dr. Ilir Kola, successfully defended his PhD thesis Enabling Social Situation Awareness in Support Agents (URL) on November 21st, 2022, at TU Delft.

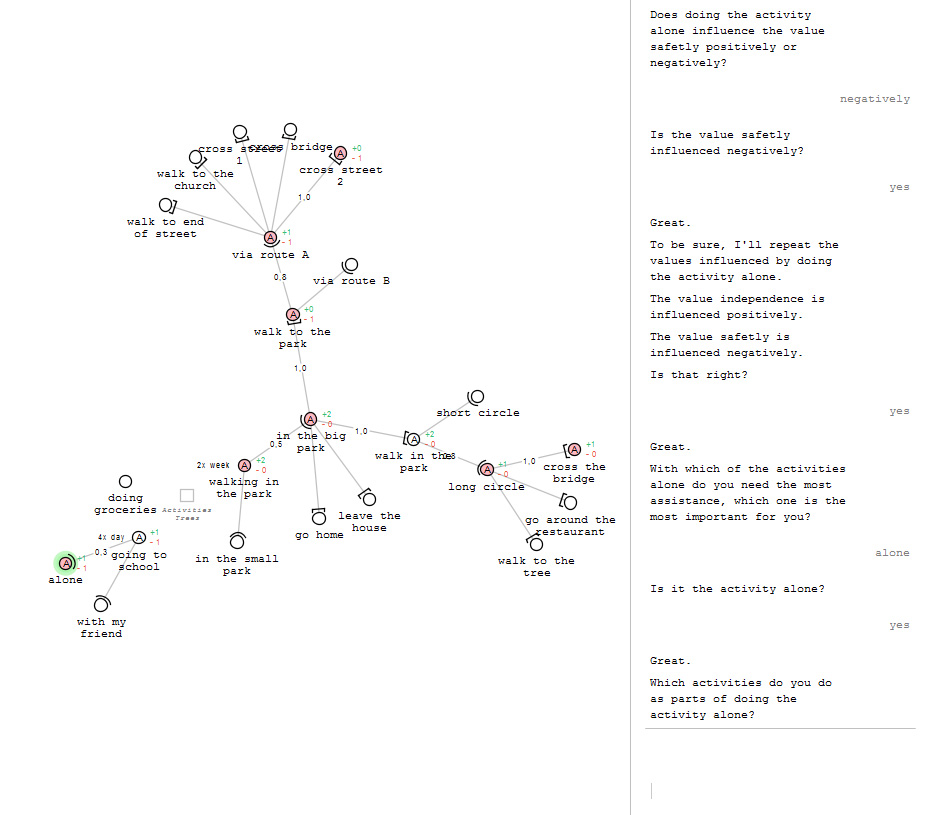

Collaboration Czech Technical University Prague

In collaboration with the Department of Computer Graphics and Interaction, we have been working on applying our research on action hierarchies and values to the domain of a navigation assistant for visually impaired people. This application takes the form of a system designed to ask users questions about their daily travelling activities. With the information from the user, the system builds an action hierarchy describing the user’s daily travelling activities and the values they influence.

The system was demoed at ICTOpen 2018 and is described in more detail in this demo proposal. The code for the conversational agent can be found here. The paper that resulted from this research, ‘Misalignment in Semantic User Model Elicitation via Conversational Agents: A case study in navigation support for visually impaired people’, can be found here.
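To make the idea of an action hierarchy annotated with values more concrete, here is a minimal sketch. It is not the code of the conversational agent mentioned above: the `Action` class, its fields, the `values_influenced` method, and the example activities and values are all hypothetical names invented for illustration, and the sketch assumes that the hierarchy links an activity to more concrete ways of carrying it out.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Action:
    """One node in a hypothetical action hierarchy (illustrative only)."""

    name: str
    # Names of the values the user says this action influences.
    values: list[str] = field(default_factory=list)
    # Assumed relation: more concrete ways of carrying out this action.
    parts: list[Action] = field(default_factory=list)

    def add_part(self, part: Action) -> Action:
        """Attach a sub-action and return it so calls can be chained."""
        self.parts.append(part)
        return part

    def values_influenced(self) -> dict[str, list[str]]:
        """Map each value to the actions in this subtree that influence it."""
        found: dict[str, list[str]] = {}
        for value in self.values:
            found.setdefault(value, []).append(self.name)
        for part in self.parts:
            for value, names in part.values_influenced().items():
                found.setdefault(value, []).extend(names)
        return found


# Invented answers a user might give when asked about a daily trip.
commute = Action("go to work")
bus = commute.add_part(Action("take the bus", ["independence"]))
bus.add_part(Action("cross the busy road", ["safety"]))
commute.add_part(Action("get a lift from a friend", ["independence"]))

for value, actions in commute.values_influenced().items():
    print(value, "<-", actions)
```

A structure along these lines would let a support agent look up which parts of a routine touch a value such as safety before suggesting a change to that routine.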

Publications

2023:

- What Can I Do to Help You? – A Formal Framework for Agents Reasoning About Behavior Change Support for People (PDF, URL, Data)

Frontiers in Artificial Intelligence and Applications, Ebook Volume 368: HHAI 2023: Augmenting Human Intellect, pages 168-181, 2023. IOS Press.

Myrthe L. Tielman, M. Birna van Riemsdijk, Michael Winikoff

- Using psychological characteristics of situations for social situation comprehension in support agents (PDF, URL, Data)

Autonomous Agents and Multi-Agent Systems 37(2):24, 2023. Springer-Verlag.

Ilir Kola, Catholijn M. Jonker, M. Birna van Riemsdijk

2022:

- Misalignment in Semantic User Model Elicitation via Conversational Agents: A Case Study in Navigation Support for Visually Impaired People (PDF, URL, Data)

International Journal of Human–Computer Interaction, 38(18-20): 1909-1925, 2022. © Taylor & Francis.

Jakub Berka, Jan Balata, Catholijn M. Jonker, Zdenek Mikovec, M. Birna van Riemsdijk, Myrthe L. Tielman

- Toward Social Situation Awareness in Support Agents (PDF, URL Editor, PDF PURE)

IEEE Intelligent Systems, 37(5): 50-58, 2022. © IEEE.

Ilir Kola, Pradeep K. Murukannaiah, Catholijn M. Jonker, M. Birna van Riemsdijk

2021:

- Predicting the Priority of Social Situations for Personal Assistant Agents (PDF, Data, Code)

In The 23rd International Conference on Principles and Practice of Multi-Agent Systems (PRIMA’20), To appear in LNCS. 2021. © Springer-Verlag

Ilir Kola, Myrthe Tielman, Catholijn M. Jonker and M. Birna van Riemsdijk

2020:

- Grouping Situations Based on their Psychological Characteristics Gives Insight into Personal Values (PDF, Data, URL)

In Proceedings of the 12th International Workshop on Modelling and Reasoning in Context (MRC 2020), pages 7-16. CEUR Workshop Proceedings. 2020.

Ilir Kola, Catholijn M. Jonker, Myrthe Tielman and M. Birna van Riemsdijk

- Linking actions to value categories – a first step in categorization for easier value elicitation (PDF)

In Proceedings of the 11th International Workshop on Modelling and Reasoning in Context (MRC 2020), pages 17-22. CEUR Workshop Proceedings. 2020.

Djosua D.M. Moonen and Myrthe L. Tielman

- Who’s that? – Social Situation Awareness for Behaviour Support Agents: A Feasibility Study (PDF, Code, Data, URL)

In Engineering Multi-Agent Systems: Seventh International Workshop (EMAS’19). volume 12058 of LNCS, pages 127-151. 2020. © Springer-Verlag.

Ilir Kola, Catholijn M. Jonker and M. Birna van Riemsdijk

2019:

- From good intentions to behaviour change: Probabilistic Feature Diagrams for Behaviour Support Agents (PDF, Code, URL)

In The 22nd International Conference on Principles and Practice of Multi-Agent Systems (PRIMA’19). volume 11873 of LNCS, pages 354-369. 2019. © Springer-Verlag.

Malte Kliess, Marielle Stoelinga, M. Birna van Riemsdijk

2018:

- Modelling the Social Environment: Towards Socially Adaptive Electronic Partners (PDF, URL)

In Proceedings of the 10th International Workshop on Modelling and Reasoning in Context (MRC 2018). 2018.

Ilir Kola, Catholijn M. Jonker, M. Birna van Riemsdijk

- What Should I Do? Deriving Norms from Actions, Values and Context (PDF, URL)

In Proceedings of the 10th International Workshop on Modelling and Reasoning in Context (MRC 2018). 2018.

Myrthe L. Tielman, Catholijn M. Jonker, M. Birna van Riemsdijk

- A Temporal Logic for Modelling Activities of Daily Living

In Proceedings of the 25th International Symposium on Temporal Representation and Reasoning (TIME 2018). Forthcoming.

Malte S. Kließ, Catholijn M. Jonker, M. Birna van Riemsdijk

2017:

- Requirements for a Temporal Logic of Daily Activities for Supportive Technology (PDF, URL, BIB)

In Workshop on Computational Accountability and Responsibility in Multiagent Systems (CARe-MAS 2017 at PRIMA’17). 2017.

Malte Kliess, M. Birna van Riemsdijk

- Action Identification Hierarchies for Behaviour Support Agents (PDF, URL, BIB)

In Third Workshop on Cognitive Knowledge Acquisition and Applications (Cognitum 2017 at IJCAI’17). 2017.

Pietro Pasotti, Catholijn M. Jonker, M. Birna van Riemsdijk

2016:

- Representing human habits: towards a habit support agent (PDF, BIB)

In Proceedings of the 10th International workshop on Normative Multiagent Systems (NorMAS’16). 2016.

Pietro Pasotti, M. Birna van Riemsdijk, Catholijn M. Jonker

2015:

- Creating Socially Adaptive Electronic Partners: Interaction, Reasoning and Ethical Challenges (PDF, URL, BIB)

In Proceedings of the fourteenth international joint conference on autonomous agents and multiagent systems (AAMAS’15), pages 1201-1206. 2015. © IFAAMAS.

M. Birna van Riemsdijk, Catholijn M. Jonker, Victor Lesser

- A Semantic Framework for Socially Adaptive Agents: Towards strong norm compliance (PDF, URL, BIB)

In Proceedings of the fourteenth international joint conference on autonomous agents and multiagent systems (AAMAS’15), pages 423-432. 2015. © IFAAMAS.

M. Birna van Riemsdijk, Louise Dennis, Michael Fisher, Koen V. Hindriks

2013:

- Agent reasoning for norm compliance: a semantic approach (PDF, BIB)

In Proceedings of the twelfth international joint conference on autonomous agents and multiagent systems (AAMAS’13), pages 499-506. 2013. © IFAAMAS.

M. Birna van Riemsdijk, Louise Dennis, Michael Fisher, Koen V. Hindriks

Origin story

Organisation-Aware Agents

The project has its origins in largely curiosity-driven research I initiated on autonomous software agents that are capable of reasoning about the organisation in which they function: organisation-aware agents (2009). I was interested in investigating whether my PhD research on programming (single) software agents could be connected with a large body of work in the multiagent systems area on the specification of agent organisations. These agent organisations are aimed at coordinating and regulating multiagent systems in order to make them function more effectively, inspired by the functioning of human organisations. However, there was very little work on how individual agents could somehow “understand” these organisational specifications and take them into account in their decision making.

I realised that a crucial step in ensuring that agents properly interpret these organisational specifications is to specify precisely what these specifications mean in terms of expected agent behaviour. That is, we needed a formal specification and semantics. This led to a collaboration with researchers working on the MOISE agent organization framework, which resulted in the paper Formalizing Organizational Constraints: A Semantic Approach (2010). However, while further studying organisational agent frameworks, I realised that things get complex quickly due to their large number of concepts, such as groups, roles and their relations, goals and missions, etc. Therefore, to make progress without getting lost in this complexity, I decided to focus for the time being solely on the concept of norms as descriptions of social expectations on agent behaviour.

Socially Adaptive Electronic Partners (SAEPs)

What finally laid the foundations for the awarded Vidi project were three further important insights:

- Norms & Temporal logic: Expectations on agent behaviour can naturally be formulated in temporal logic, as we did in the above-mentioned 2010 paper on MOISE. These temporal specifications can then be interpreted as norms describing desired agent behaviour. Agents that adapt to norms may then be specified using techniques from executable temporal logic, which is used to generate behaviour from a temporal specification. In collaboration with Dr. Louise Dennis and Prof. Michael Fisher from Liverpool and Dr. Koen Hindriks (Delft) we worked out this idea in two publications on norm compliance (2013, 2015).

- Norms & Supportive technology: Agents’ ability to adapt to norms is especially important when these agents support people in their daily lives. Without such adaptation, software is rigid, which leads to violations of norms that are important to people. This insight was inspired by research in the Interactive Intelligence section led by Prof. Catholijn Jonker at TU Delft on Human-Agent Robot Teamwork (HART).

- Norms & Human values: The underlying reason why it is important for supportive technology to attempt to adhere to people’s norms is that these norms reflect people’s values – i.e., what people find important in life. We obtained this insight through the research of my PhD student Alex Kayal who worked on the COMMIT/ project on normative social applications (PhD thesis (2017)), in particular focusing on location sharing between parents and children. The importance of values emerged from a user study (2013) we conducted to better understand this application context.
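As a purely illustrative example of a norm written as a temporal formula (it is not taken from the publications listed above), assume two hypothetical propositions in the location-sharing setting, $\mathit{leavesSchool}$ and $\mathit{locationShared}$. The expectation that whenever the child leaves school, their location is eventually shared with the parent could then be written in linear temporal logic as:

$$\Box\,(\mathit{leavesSchool} \rightarrow \Diamond\,\mathit{locationShared})$$

Here $\Box$ reads "always" and $\Diamond$ reads "eventually". Under this reading, an execution of the software complies with the norm if every moment at which $\mathit{leavesSchool}$ holds is followed, at that moment or later, by a moment at which $\mathit{locationShared}$ holds.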

These ingredients, i.e., the technical approach, the type of application, and the “why” behind all of this, are what eventually led to the writing of the awarded project.